On this page

- How Prosper did evaluation

- Our success criteria

- Postdoc data collected (1) during project – a mix of quantitative and qualitative data

- Postdoc evaluation during the cohort

- Other in-cohort evaluation

- End of cohort exit or early leavers survey

- Post-cohort evaluation (longitudinal)

- Data analysis

- Discrepancies/oddities

- Reporting and publicising results

- Evaluation resources

- References

How Prosper did evaluation

Evaluation was an integral part of Prosper, contributing to the development model in multiple ways. It allowed us to continuously test and improve our co-created resources in response to the needs of our key audiences. Our evaluation spanned different audiences and time spans. We considered the postdoc audience at several scales, from the national postdoc population through to local and cohort level, and followed the impact of Prosper on our postdoc cohorts in the short and long term.

Our success criteria

As Prosper was a project funded by Research England, we had a set of agreed success criteria. Our evaluation was set up to measure our progress against these criteria, including the short and longer term impact of Prosper. A brief outline of these broad criteria is given below:

- Prosper model and associated suite of resources available via an open access portal is evaluated and tested

- Pilot cohort of postdoctoral researchers consider a broader range of career pathways

- Employer partners engaged with to inform postdoc career development

- Principal investigators (PIs) development model to enable and support postdocs to consider multiple career pathways

- Data on postdoc population and understanding the barriers to postdoc engagement with career development

Each of these broad success criteria was broken down into smaller, measurable deliverables, both within the project and after the project funding ended.

What we measured in postdocs

- EDI including discipline, years of postdoc experience, time left on contract

- Changes in confidence, attitudes and perceptions of career options

- Engagement with career development opportunities (Prosper and other)

- Future career plans and outcomes

Postdoc data collected (1) during project – a mix of quantitative and qualitative data

Initial baseline

Prosper began in October 2019. Our initial evaluation compared existing data on the national postdoc population (HESA postdoc data) and global postdoc population data from the literature against the postdoc population in our three partner institutions (Lancaster University, the University of Liverpool and the University of Manchester).

This baseline data allowed us to set realistic EDI targets by discipline for recruitment to our two pilot cohorts.

See our blog for more detail on the postdoc population findings.

Additional document - Prosper evaluation framework.

A literature review of existing postdoc career development offerings was conducted. Several focus groups of postdocs and principal investigators (managers of researchers) from the University of Liverpool were held to identify and confirm relevant challenges and suggestions for Prosper to address. See this page for a summary of the literature review.

Recruiting (pre-cohort) postdoc evaluation

Recruitment to the two pilot cohorts required applicants to answer two questions about their motivation to join Prosper, provide details of their disciplinary area and answer EDI questions.

For full details on recruitment, including all questions asked, see our how to recruit a cohort page.

Postdoc evaluation during the cohort

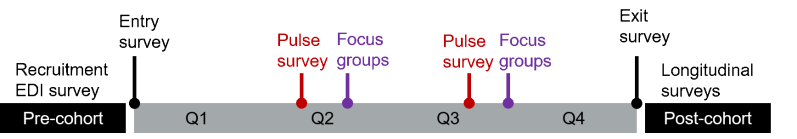

Each of Prosper’s two pilot cohorts was 12 months in duration. Part of our cohort participant agreement was a commitment to provide feedback. Entry, exit and pulse survey data were all attributable, rather than anonymous.

For full details on the participant agreement, see our how to recruit a cohort page.

The main surveys issued to the cohort were:

Entry survey

The entry survey provided a baseline from which to measure the impact of Prosper on the cohort members when compared with the exit (or early leavers) survey. Completing the entry survey and the participant agreement was compulsory for participants entering the cohort. The cohort members had two weeks to complete the survey and we sent a maximum of four follow-up prompt emails.

The entry survey recorded the EDI details of the participants, such as gender, sexuality, caring responsibilities, ethnicity, disciplinary background and years of experience as a postdoc. In addition, this survey recorded attitudes towards career development and opportunities beyond academia, and the postdocs’ confidence to self-direct their own career development. The entry survey also included open-ended questions, with space for postdocs to set their own goals for the first phase (approximately the first three months) of their Prosper pilot. Including open-ended questions in the surveys provided a wealth of data which added depth to the quantitative findings and helped us to present the impact of Prosper cohesively and comprehensively.

Pulse surveys

The Prosper pulse surveys were shorter than the comprehensive entry and exit surveys, and were primarily geared towards tracking engagement and feedback on the content and sessions. Feedback was collected on the time the participants spent on Prosper (including the time spent on different resources) and the experience of live delivered sessions. A descriptive analysis (of averages and percentages) of the pulse survey data was used by the Prosper team to define topics for cohort focus groups and modify or develop additional resources. In each 12-month pilot cohort, we issued two pulse surveys. The first pulse survey broadly marked the end of the Reflect phase (after around three months) and the second pulse survey broadly marked the conclusion of the Explore phase (after around six months). The Act phase was covered in the exit survey.

The first Prosper pulse survey asked whether the postdocs felt that they had progressed towards the career development goals they set in the entry survey. Open-ended questions were included in the pulse surveys to ask the postdocs to set goals for the next phase (a period of around three months). This pattern of asking postdocs to consider their progress towards their goals and to set new goals was followed in the second pulse survey.

As these pulse surveys were issued between the entry and exit surveys, we aimed for the cohort to complete them within two weeks of issue. We sent up to four reminder emails and also posted reminders in the cohort instant messaging (Slack) chat. In practice, we typically had to send several reminders to ensure that at least 80% of the cohort completed the survey within a month of issue.

Other in-cohort evaluation

Focus groups

Topics and commonly arising issues or barriers to engagement were determined from a mixture of the pulse survey data, interactions with the cohort and discussions with the career coaches. A small number of postdocs (typically 5 to 6) were invited to participate in a one-hour virtual focus group to discuss and explore the specific topic. A diverse and representative range of cohort postdocs were invited to participate in focus group discussions. Each session was run by two members of the Prosper team, one primarily to facilitate and one to take notes. Prosper held focus groups on the following themes: time management, journaling, development of the Prosper portal, community building, career clusters and employer engagement, coaching, support from PIs, overall experience, and feedback. The Prosper team then discussed the best way to address the issues raised.

For more details on how to run a focus group see our page on running a focus group.

One-off session evaluation

We primarily sought feedback on one-off sessions that had been commissioned from external suppliers, to assess their quality and impact on the postdoc audience. These surveys were anonymous. However, we found that the completion rate of these forms was poor, so instead we included a question listing all of the quarter’s sessions in the respective pulse and exit surveys. This had the minor drawback of human error, with cohort members misremembering which sessions they’d attended.

See an example of a typical survey used for evaluation of a one-off session.

Buddy scheme evaluation

For details on how we ran a buddy scheme and an example of the anonymous feedback forms used see short duration and longer duration buddy schemes.

Portal feedback forms

Each page of our prototype online portal had a very basic anonymous feedback form at the end of the page. This feedback was automatically delivered to the Prosper inbox and included the specific webpage that it was filled in from.

A copy of the questions asked can be found here.

Direct feedback from cohort via email/instant messaging/verbal

Direct informal feedback was collected from the cohort members as well as other engaged stakeholders (postdocs, PIs and so on). This was shared and discussed within the Prosper team to see how best the feedback could be addressed or incorporated.

Reflective journaling

Journaling was recommended to the postdocs as a means to aid self-reflection and to trace the evolution of their career development strategies and growth. The journal entries could be accessed by the individual’s career coach, the Prosper team and the individual postdoc themselves. We found that the postdocs had a mixed response to journaling, with some really valuing it and some not finding it a useful practice for them. The fact that their journal entries were not fully private also dissuaded some postdocs from engaging with it. The reflective journal entries were a rich source of qualitative data for the Prosper team to understand mindset shifts, patterns of engagement and postdoc concerns during their personal career development journey. However, we found that analysing and anonymising this data was extremely time consuming.

See our blog post on our initial analysis of cohort 1 journals. See our journaling to increase your self-awareness page for more details and journal prompts.

End of cohort exit or early leavers survey

The exit survey recorded the impact of Prosper career development on postdocs as they concluded their engagement with the cohort. During both pilot cohorts the Prosper team developed two surveys: an early leavers survey, completed by postdocs who left the cohort before the end for various reasons (such as the end of their postdoc contract, or new jobs within and beyond academia), and an exit survey, completed by postdocs who engaged with Prosper through to the end of the cohort (the full 12 months).

The exit and early leavers surveys were very similar to each other and largely asked the same questions as the entry survey so the data could be directly compared. The questions and their order were kept the same as in the entry survey as far as possible. The exit and early leavers surveys included additional questions on the postdocs’ experience of applying for jobs within and/or beyond academia, their future plans and overall feedback on their experience of Prosper career development.
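Because the entry and exit surveys were attributable and asked the same questions, each person's answers can be paired and compared. The following is a minimal sketch of that comparison, not the analysis Prosper actually ran: the participant IDs, the 1 to 5 confidence scale and the function name `paired_changes` are all hypothetical.

```python
from statistics import mean


def paired_changes(entry, exit_):
    """Return exit-minus-entry change for participants present in both surveys."""
    shared = sorted(set(entry) & set(exit_))
    return {pid: exit_[pid] - entry[pid] for pid in shared}


# Hypothetical 1-5 confidence ratings keyed by participant ID (illustrative only).
entry_scores = {"p01": 2, "p02": 3, "p03": 4, "p04": 2}
exit_scores = {"p01": 4, "p02": 3, "p03": 5, "p05": 4}  # p04 has no exit record

changes = paired_changes(entry_scores, exit_scores)
mean_change = mean(changes.values())
improved = sum(1 for change in changes.values() if change > 0)

print(changes)      # per-person change for matched participants only
print(mean_change)  # average change across matched participants
print(improved)     # number of matched participants whose rating went up
```

Only participants who appear in both surveys are compared, so anyone without a matching record is left out of the change figures rather than counted as zero.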

We found getting good response rates to the exit and early leavers surveys could be problematic as the postdocs may have left the institution, changed email address, or started a new role limiting their time and availability. Most early leavers completed the survey within one week. We ran the end of cohort surveys for two months with a maximum of four reminders being sent during this period.

Post-cohort evaluation (longitudinal)

The longitudinal survey was designed to collect data which addressed our success criteria such as applying for jobs (beyond and within academia), engaging with employers, engagement in career development and tracking the employment trajectory of former cohort members. This survey has been designed to allow us to track the long-term impact engaging with Prosper has had on the two pilot cohorts.

The first pilot cohort longitudinal survey was issued 7 months after the formal end of the cohort. Of all the surveys, this was the most challenging to get a good response rate for, as many of the postdocs had moved on to new jobs. This survey took 16 weeks to achieve a completion rate of 77% (40 out of 52 former members of the first cohort). This completion rate is acceptable in long-term studies, where the engagement rate decreases over time. We plan to issue a longitudinal survey to cohort 1 again 1 year, 2 years and 3 years after the end of the cohort. We plan to do the same for the former members of cohort 2.
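The completion rate quoted above is a simple proportion of completed surveys to people invited. As a small worked sketch using the figures from this page (the function name `response_rate` is our own, hypothetical choice):

```python
def response_rate(completed, invited):
    """Return the percentage of invited people who completed the survey."""
    if invited <= 0:
        raise ValueError("invited must be a positive number")
    return 100 * completed / invited


# Figures from the first longitudinal survey: 40 completions from 52 former members.
rate = response_rate(40, 52)
print(f"{rate:.1f}%")
```

The same calculation can be rerun each time reminders are sent to see how close a survey is to its target completion rate.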

Data analysis

How the quantitative survey data is analysed depends on the evaluation design, the nature of the questions and the data collected.

Since we collected a combination of quantitative data (through surveys) and qualitative data (from surveys and focus group discussions), we used different strategies to analyse the different kinds of data.

For the quantitative data, we used descriptive analysis (proportions, percentages and averages) for the majority of responses. We also used a more complex combination of factor analysis and analysis of variance (ANOVA) to measure changes in confidence.

Factor analysis is a statistical technique used to reduce large sets of correlated variables (data units or responses to questions) into a smaller number of groups or factors. It can be run in SPSS (Statistical Package for Social Sciences, a data management and analysis package developed by IBM) to group variables together into a single variable or factor. Grouping variables together in this way makes it easier to understand observed shifts. In our study we were able to condense 18 variables into 5 factors, which made it easier for us to understand and present our data.

ANOVA is a statistical test, which can be run in SPSS and in Microsoft Excel, that compares the means of two or more groups of data to test whether the differences between them are statistically significant. In essence, ANOVA allows you to test whether or not there is a meaningful difference between your groups of data.
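Factor analysis works on the correlations between variables, so a useful first look at the data is the correlation matrix. The sketch below computes Pearson correlations in plain Python. It is only this preliminary step, not factor analysis itself (which we ran in SPSS), and the question names and ratings are hypothetical.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation between two equal-length lists of numbers.

    Assumes both lists vary; a constant list would cause a division by zero.
    """
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    covariance = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    return covariance / (spread_x * spread_y)


def correlation_matrix(columns):
    """Return a nested dict of correlations between every pair of columns."""
    names = list(columns)
    return {a: {b: pearson(columns[a], columns[b]) for b in names} for a in names}


# Hypothetical 1-5 ratings from six respondents on three survey questions.
responses = {
    "confidence_cv": [2, 3, 4, 5, 3, 4],
    "confidence_interview": [1, 3, 4, 5, 2, 4],
    "knows_employers": [5, 1, 4, 2, 3, 3],
}

matrix = correlation_matrix(responses)
for name, row in matrix.items():
    print(name, {other: round(value, 2) for other, value in row.items()})
```

Questions that correlate strongly with each other, like the two confidence questions here, are the kind that factor analysis would tend to group into a single factor.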
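To show what the test calculates, here is a minimal sketch of the F statistic for a one-way, between-groups ANOVA. This is the simplest form of the test and an assumption on our part: this page does not specify which ANOVA design was used. The ratings are hypothetical, and the sketch stops at the F statistic; tools such as SPSS or Excel also report the p-value used to judge significance.

```python
from statistics import mean


def one_way_anova_f(groups):
    """Return (F statistic, between-groups df, within-groups df) for a one-way ANOVA."""
    group_count = len(groups)
    total_count = sum(len(group) for group in groups)
    grand_mean = sum(sum(group) for group in groups) / total_count

    # Variation of the group means around the overall mean.
    ss_between = sum(len(group) * (mean(group) - grand_mean) ** 2 for group in groups)
    # Variation of individual scores around their own group mean.
    ss_within = sum((score - mean(group)) ** 2 for group in groups for score in group)

    df_between = group_count - 1
    df_within = total_count - group_count
    f_statistic = (ss_between / df_between) / (ss_within / df_within)
    return f_statistic, df_between, df_within


# Hypothetical 1-5 confidence ratings for three groups of respondents.
groups = [[2, 3, 3, 2], [3, 4, 4, 3], [4, 5, 5, 4]]
f_statistic, df_between, df_within = one_way_anova_f(groups)
print(f_statistic, df_between, df_within)
```

A larger F statistic means the group averages differ more than the spread within each group would suggest.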

We thematically analysed the qualitative data collected across journal entries, focus group discussions and postdoc responses to open ended survey questions. This involved identifying how these qualitative data or texts responded to different themes. We also analysed this data to identify emergent themes. For instance, when we examined postdoc responses to impediments to engagement, we identified struggles with journaling, time management and a lack of a sense of belonging. These are themes which emerged from a particular open-ended question in a survey.

Additionally, we found that some survey questions generated responses which lent themselves to attractive presentation in the form of word clouds. Questions we found particularly useful in this way were: asking postdocs to identify the most useful resource, what the primary limitation to their engagement with their Prosper career development was, and which three words they would use to describe their experience of career development.
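A word cloud is driven by how often each word appears in the responses. The sketch below counts word frequencies from free-text answers. The answers and the stopword list are hypothetical, and drawing the cloud itself is left to whichever tool you prefer.

```python
import re
from collections import Counter


def word_frequencies(responses, stopwords=frozenset()):
    """Count how often each word appears across a list of free-text responses."""
    counts = Counter()
    for text in responses:
        # Keep letters, apostrophes and hyphens so "eye-opening" stays one word.
        words = re.findall(r"[a-z][a-z'-]*", text.lower())
        counts.update(word for word in words if word not in stopwords)
    return counts


# Hypothetical answers to "which three words describe your experience?"
answers = [
    "Empowering, challenging, useful",
    "useful; eye-opening; empowering",
    "Useful and reassuring",
]

frequencies = word_frequencies(answers, stopwords={"and"})
print(frequencies.most_common(3))
```

The same counting approach can be used to tally how often each theme appears once qualitative responses have been coded.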

A sample report based on this evaluation for cohort 1 can be found here.

We would recommend that, as a minimum, a descriptive analysis of the quantitative evaluation data is performed. This can be easily done using software such as Microsoft Excel to calculate proportions, averages or percentages. Additionally, themes can be extracted from any qualitative data collected. We have prioritised the analysis and presentation of quantitative data in our reports and used our qualitative analysis to complement it. You can combine the results and your analysis of them in a report, or in whatever form you wish to disseminate your evaluation results.
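If you prefer a script to a spreadsheet, the same minimum descriptive analysis can be sketched in a few lines of Python. The ratings, answer options and the function name `percentages` below are hypothetical examples, not Prosper data.

```python
from collections import Counter
from statistics import mean


def percentages(answers):
    """Return the percentage of respondents choosing each answer option."""
    counts = Counter(answers)
    total = len(answers)
    return {option: round(100 * count / total, 1) for option, count in counts.items()}


# Hypothetical 1-5 session ratings and answers to a yes/no/unsure question.
ratings = [4, 5, 3, 4, 4, 2, 5, 4]
progress_answers = ["Yes", "Yes", "No", "Yes", "Unsure"]

average_rating = mean(ratings)
answer_percentages = percentages(progress_answers)

print(average_rating)
print(answer_percentages)
```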

Discrepancies/oddities

We noted human error (or caution) among those completing the surveys and questionnaires. We found discrepancies between the answers some postdocs gave at recruitment and those they gave at on-boarding to the cohort. There were discrepancies in answers for university/institution, faculty, gender, disability and ethnicity. Some of these will be due to genuine error. There may have been some confusion in selecting a broad faculty definition, or postdocs may have changed faculty between surveys (around 4 months apart).

It’s known that disability, as an example, is underreported in academia (see our blog post), so this may be a factor for some of the discrepancies, with some individuals becoming more comfortable in revealing further information in a survey conducted once they’d been accepted onto the cohort.
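Discrepancies between the recruitment and on-boarding answers can be found by comparing the two sets of records field by field. The following is a minimal sketch under assumed data: the participant IDs, field names and values are hypothetical, and it only flags differences for a person to review rather than deciding which answer is correct.

```python
def find_discrepancies(recruitment, onboarding, fields):
    """Return {participant_id: {field: (recruitment_value, onboarding_value)}} for mismatches."""
    flagged = {}
    for pid in sorted(set(recruitment) & set(onboarding)):
        differences = {
            field: (recruitment[pid].get(field), onboarding[pid].get(field))
            for field in fields
            if recruitment[pid].get(field) != onboarding[pid].get(field)
        }
        if differences:
            flagged[pid] = differences
    return flagged


# Hypothetical records from the two data collection points.
recruitment = {
    "p01": {"faculty": "Science", "disability": "Prefer not to say"},
    "p02": {"faculty": "Humanities", "disability": "No"},
}
onboarding = {
    "p01": {"faculty": "Science", "disability": "Yes"},
    "p02": {"faculty": "Humanities", "disability": "No"},
}

flagged = find_discrepancies(recruitment, onboarding, ["faculty", "disability"])
print(flagged)
```

A changed answer is not necessarily an error, as the disability example above shows, so flagged records are best treated as prompts for interpretation.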

We also noted some likely discrepancies in the pulse surveys on rating sessions. Despite session titles being included we believe some of those surveyed gave ratings based on the broad themes, rather than in relation to the specific session.

Reporting and publicising results

We wrote a report, blog and staff news article publicising the impact of our first pilot cohort. We also disseminated this report to other HEIs via either direct email to our contacts or via mailing lists, such as the R14 mailing list.

See the impact and reports of Prosper’s two pilot cohorts here, and our first pilot cohort blog. You can also download our two evaluation reports, post cohort 1 and cohort 2.

Evaluation resources

Below are links to examples of surveys we created. Please note that all surveys were created and hosted on Jisc online surveys. We’ve provided the examples in Word document format so you can readily adapt them.

- EDI collected at cohort recruitment

- Cohort Entry survey questions

- Cohort Pulse survey questions (two versions, one early in cohort and one later)

- Cohort Early-leavers survey questions

- Cohort Exit survey questions

- Cohort Longitudinal survey questions

- One-off session survey questions

- Buddy scheme survey questions (two examples for a buddy scheme running for a duration of ~ 11 weeks, and one running for a duration of around 3 to 4 weeks).

- Prosper evaluation reports; after cohort 1, after cohort 2.

References

Quantitative analysis references:

www.statisticshowto.com/factor-analysis

www.statisticshowto.com/probability-and-statistics/hypothesis-testing/anova/

Hadi, N.U., Abdullah, N. and Sentosa, I., 2016. An easy approach to exploratory factor analysis: Marketing perspective. Journal of Educational and Social Research, 6(1), p.215.

Pallant, J., 2003. SPSS Survival Manual: A Step by Step Guide to Data Analysis Using SPSS. McGraw-Hill Education, ProQuest Ebook Central.
